The Strange Case of AI & Automation

In an era where “AI” has become a pervasive buzzword, the term is often slapped onto any project that demonstrates even a small degree of automation. This tendency creates confusion, blurring the lines between true artificial intelligence and simple, rule-based systems.

We frequently see straightforward automated tasks being mislabeled as AI — and it’s worth exploring this distinction.

The Anatomy of a “Smart” Bin

A perfect case study for this confusion is a common DIY project: the so-called “smart” trash bin.

Typically, such a project involves three main components:

- An Arduino board to act as the brain

- An ultrasonic sensor to detect an object (like a hand or trash)

- A servo motor to open and close the lid

The logic is simple:

The ultrasonic sensor continuously measures the distance to the nearest object. When something comes within a predefined range (the stimulus), the Arduino sends a signal to the servo motor to open the lid. Once the object is gone, the lid closes.

While undeniably clever and convenient, this is a classic example of rule-based automation, not artificial intelligence. The system isn’t learning, adapting, or reasoning; it’s just executing a hardcoded set of instructions.

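```cpp
// A simple, rule-based logic (fragment from the sketch's loop).
// distance_from_sensor and threshold_value are assumed to be set elsewhere;
// servo is assumed to be an Arduino Servo object, whose write() takes degrees.
if (distance_from_sensor < threshold_value) {
    // Action: Object is close. Open the bin lid.
    servo.write(180);
} else {
    // Action: No object. Close the bin lid.
    servo.write(0);
}
```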

This logic follows a fixed rule and will perform the same action every single time the condition is met — no “intelligence” involved.
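To make the "same action every time" point concrete, the rule can be written as a pure function in plain C++ and checked away from the board. This is only a sketch, not the project's firmware: `lidAngleFor`, the 180 and 0 degree angles, and the 20 cm threshold used in the note below are illustrative assumptions.

```cpp
// Hypothetical helper: maps a distance reading to a lid angle.
// The names and angles are illustrative assumptions, not project code.
constexpr int kOpenAngle = 180;   // lid fully open (assumed)
constexpr int kClosedAngle = 0;   // lid closed (assumed)

constexpr int lidAngleFor(double distanceCm, double thresholdCm) {
    // One fixed comparison: no state, no history, nothing learned.
    return distanceCm < thresholdCm ? kOpenAngle : kClosedAngle;
}
```

Calling `lidAngleFor(5.0, 20.0)` returns 180 however many times it is called and in whatever order. A reading of exactly 20.0 leaves the lid closed, because the comparison is strict.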

Is the Hardware Overkill?

For a task as simple as detecting presence, the choice of hardware in such projects can often be considered overkill.

- The Sensor:

An ultrasonic sensor measures precise distances, but a smart bin doesn’t need that level of accuracy. All it needs is a binary response — something is there or nothing is there.

A simpler, cheaper IR proximity sensor would work perfectly for this yes/no detection.

- The Board:

The Arduino is great for beginners and multi-purpose projects, but a full development board is more than a single-task automation like this needs.

A compact ESP32 could handle this job just as well. It is not a smaller chip (it is considerably more powerful than a classic Arduino Uno), but its built-in Wi-Fi and Bluetooth would put that extra capability to use in future upgrades (for example, sending a notification when the bin is full).
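The sensor point can be sketched in C++. The lid rule only ever consumes a yes/no value, so the centimetre precision of an ultrasonic reading is thrown away by the threshold. The helper names and the active-low IR output are assumptions for illustration, not details from the project; the echo-time conversion uses a speed of sound of roughly 343 m/s.

```cpp
// Speed of sound in air is about 343 m/s, i.e. 0.0343 cm per microsecond.
constexpr double kCmPerMicrosecond = 0.0343;

// Convert a round-trip echo time (microseconds) to a one-way distance in cm.
constexpr double echoMicrosToCm(double echoMicros) {
    return echoMicros * kCmPerMicrosecond / 2.0;
}

// Ultrasonic path: measure a distance, then collapse it to yes/no.
constexpr bool presentFromUltrasonic(double echoMicros, double thresholdCm) {
    return echoMicrosToCm(echoMicros) < thresholdCm;
}

// IR path: the module already reports yes/no on a digital pin.
// Assumption: the output is active-low (a LOW pin means "object detected").
constexpr bool presentFromIr(bool pinIsHigh) {
    return !pinIsHigh;
}
```

Both paths end in the same type, a `bool`, which is all the lid logic needs. With an assumed 20 cm threshold, a 1000 microsecond echo (about 17 cm) counts as "present" and a 2000 microsecond echo (about 34 cm) does not.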

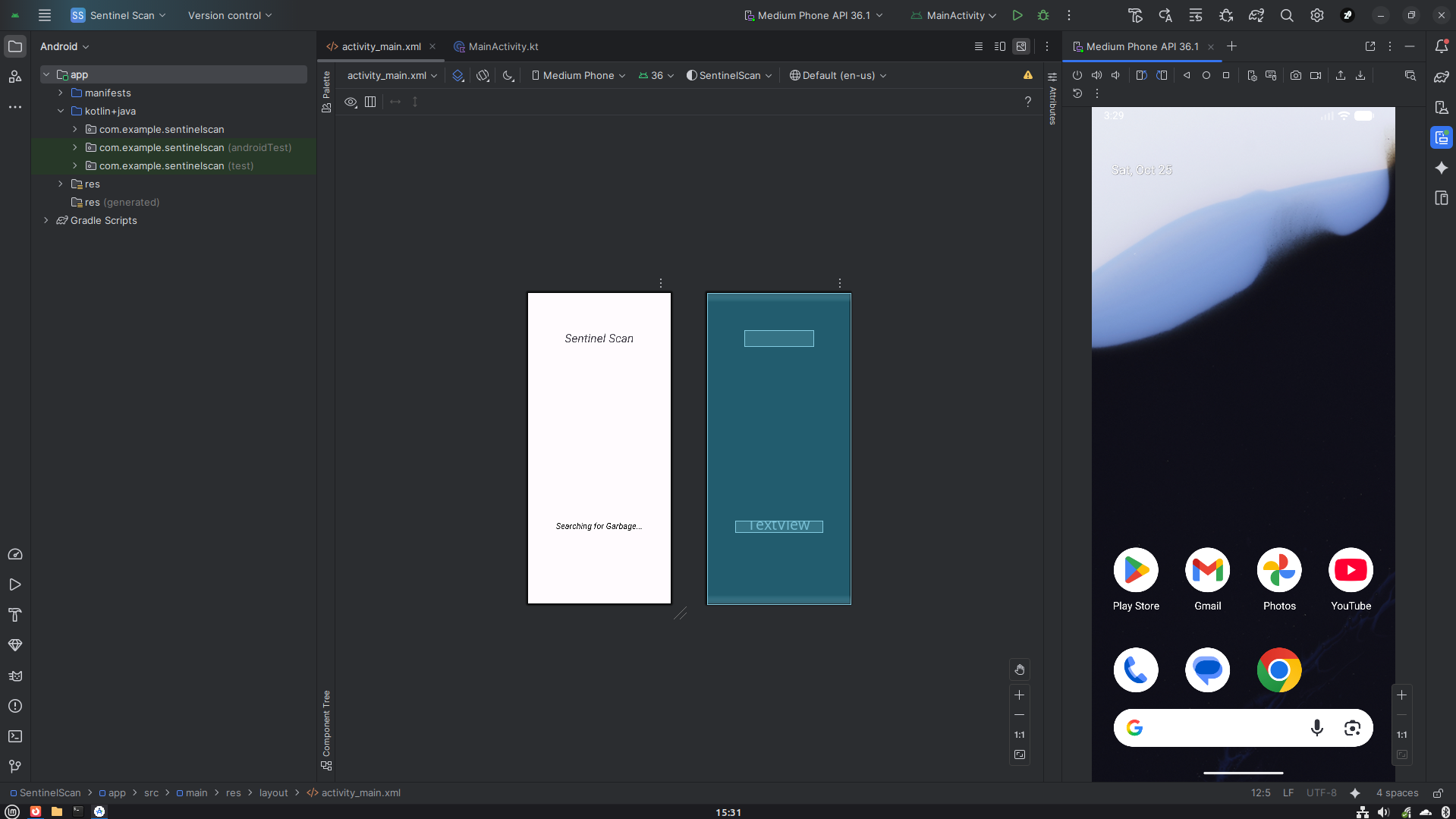

A Modern Analogy: The “Sentinel Scan” App

To simplify the concept even further, the same logic can be replicated on something we all have — our smartphones.

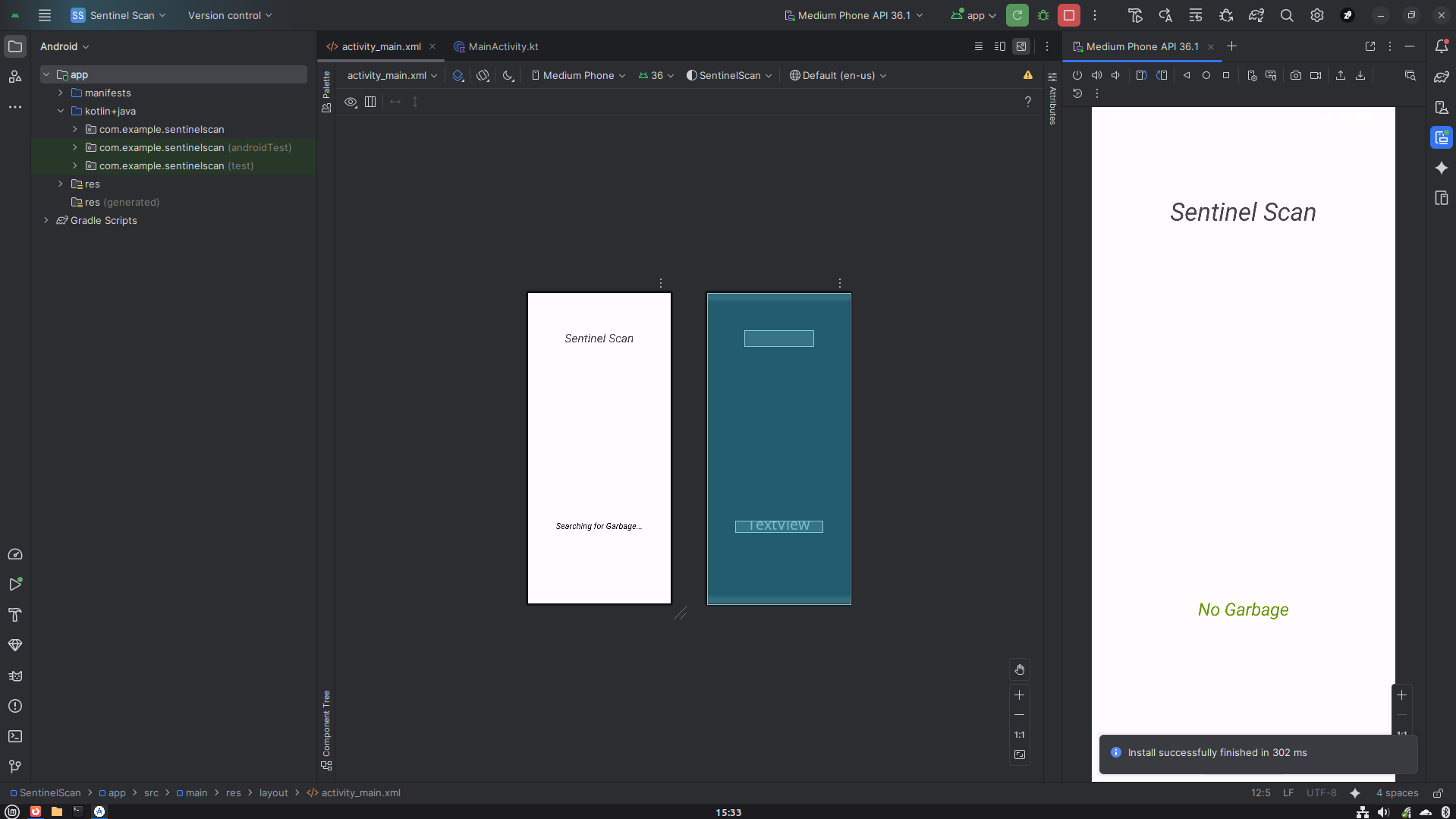

This was elegantly demonstrated in an Android app called “Sentinel Scan”, developed by Pranav Sharma using Android Studio. The app essentially turns your phone into a simple object detector.

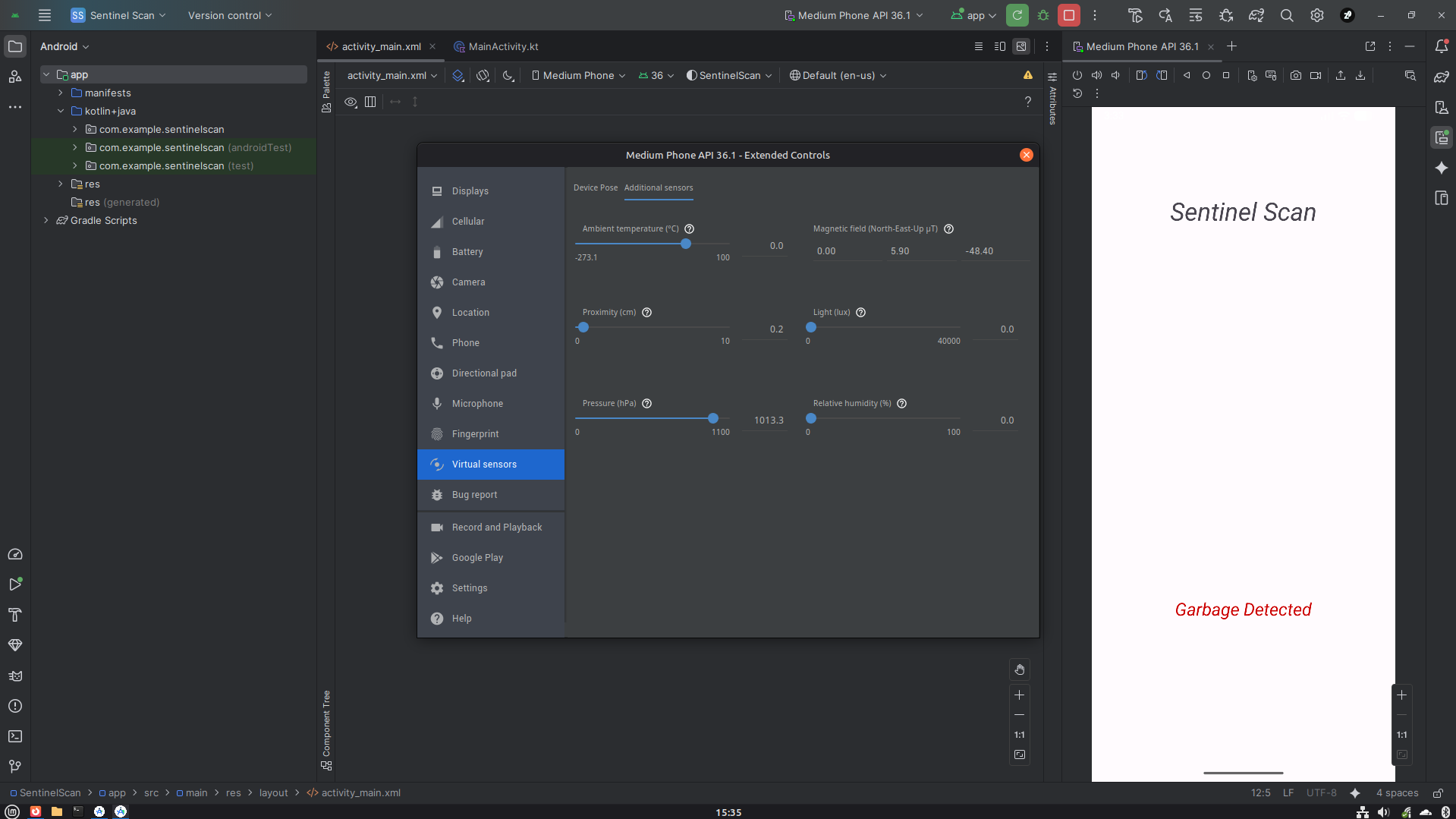

- It uses the phone’s built-in proximity sensor — the same one that turns off your screen during a call.

- When you wave your hand over the sensor, the app detects a change.

- Instead of rotating a servo motor, it simply changes the on-screen text to “Garbage Detected”.

- When your hand is removed, it switches back to “No Garbage.”

“This is a basic rule-based automation, not AI.”

This example perfectly mirrors the logic behind the so-called smart bin. The underlying mechanism is a simple conditional rule, not a learning or thinking machine.
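The app's Android source is not reproduced here, so the following is only a C++ sketch of the same rule, not the app's code. It assumes the common Android behaviour in which a proximity sensor reports its maximum range when nothing is near and a smaller value otherwise; `labelFor` and the 5.0 cm maximum range in the note below are hypothetical.

```cpp
#include <string>

// Hypothetical stand-in for the app's rule: a reading below the sensor's
// maximum range is treated as "near" (an assumption about the device).
std::string labelFor(float readingCm, float maxRangeCm) {
    if (readingCm < maxRangeCm) {
        return "Garbage Detected";  // something is over the sensor
    }
    return "No Garbage";            // nothing near the sensor
}
```

With an assumed maximum range of 5.0 cm, `labelFor(0.0f, 5.0f)` yields "Garbage Detected" and `labelFor(5.0f, 5.0f)` yields "No Garbage". Structurally this is the bin's if/else again, with a text label in place of a servo angle.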

Conclusion

It’s exciting to build systems that respond to their environment — and automation truly makes our lives easier. However, it’s crucial to use the right terminology.

True Artificial Intelligence involves concepts such as machine learning, neural networks, and adaptive decision-making — systems that can learn from data, adjust to new inputs, and predict outcomes.

Rule-based automation, on the other hand, is about executing predefined instructions. It’s not lesser — just different.
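For contrast, here is a toy C++ sketch of the smallest step away from a hardcoded rule: the threshold is derived from labelled example readings instead of being typed in. It is far from real AI and is not part of the bin project; `Sample`, `learnThreshold`, and the midpoint strategy are assumptions chosen purely for illustration.

```cpp
#include <algorithm>
#include <vector>

// One labelled example: a distance reading and whether the lid should open.
struct Sample {
    double distanceCm;
    bool shouldOpen;
};

// Toy "learning": place the threshold midway between the farthest reading
// labelled "open" and the nearest reading labelled "stay closed".
// Assumes the data contains at least one sample of each label.
double learnThreshold(const std::vector<Sample>& samples) {
    double farthestOpen = 0.0;
    double nearestClosed = 1e9;
    for (const Sample& s : samples) {
        if (s.shouldOpen) {
            farthestOpen = std::max(farthestOpen, s.distanceCm);
        } else {
            nearestClosed = std::min(nearestClosed, s.distanceCm);
        }
    }
    return (farthestOpen + nearestClosed) / 2.0;
}
```

With readings of 5 and 12 cm labelled "open" and 30 and 45 cm labelled "closed", the function returns 21. Add an "open" example at 18 cm and the threshold moves to 24. The bin's `if` statement, by comparison, never changes unless someone edits the code.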

By understanding this distinction, we not only respect the complexity of genuine AI but also learn to appreciate the elegant simplicity of well-crafted automation.

In the Sentinel Scan app, a SensorEventListener captures the proximity sensor's input, and its onSensorChanged method runs the conditional logic, updating the on-screen text to “Garbage Detected”. This confirms the end-to-end data flow from sensor to screen.

Download

[customlink src="/media/files/sentinel-scan-by-pranav-sharma.apk" heading="Project Source" description="Try this APK on your device" size="5MB"]